How to deploy the Lab and Workshop guide

You likely already have the necessary environment set up to run this workshop. However, if you want to recreate it on your own, follow the instructions below to prepare your environment.

The "AI Specialist" module only requires the Cloud/Core Datacenter infrastructure, while the "Platform Specialist" module needs both the Cloud and the Edge sides.

Cloud/Core Datacenter

OpenShift

| This workshop was prepared to be deployed in OpenShift 4.18 and OpenShift AI 4.19 |

This workshop requires an OpenShift cluster. You might already have one. If not, and you have access to the Red Hat Demo Platform, you can order either the "RHOAI on OCP on AWS with NVIDIA GPUs" lab (with or without GPUs) or, if you want an "empty" environment, the "Red Hat OpenShift Container Platform Cluster (AWS)" lab.

Lab Baseline

| The number of workers required depends on their size, but in the Red Hat Demo Platform you may need at least four workers (up from the default of three) to support all the infrastructure services for the workshop. Before deploying anything, make sure the environment is properly scaled; otherwise, the deployment may hang and never complete. If you run in an environment with GPUs, you might want to create a worker MachineSet without GPUs to run all the infrastructure services (e.g. 5 x m5a.4xlarge). Note that some services may be killed with an OOMKilled error if resources are short, starting with the Gitea Operator. |
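The scaling step described above can also be done from the command line. The following is a minimal sketch, assuming `oc` is installed and logged in as a cluster admin; the function names are hypothetical helpers, and the MachineSet name must be taken from your own cluster.

```shell
#!/usr/bin/env bash
# Sketch only: helper functions to inspect and scale worker MachineSets.
# Assumption: `oc` is logged in with cluster-admin rights.

# MachineSets live in this namespace on OpenShift.
MACHINE_NS="openshift-machine-api"

# List the MachineSets so you can pick the one to scale.
list_machinesets() {
  oc get machinesets -n "$MACHINE_NS"
}

# Scale a MachineSet to the given number of replicas.
#   $1 = MachineSet name (cluster specific), $2 = desired replicas
scale_machineset() {
  local name="$1" replicas="$2"
  oc scale machineset "$name" -n "$MACHINE_NS" --replicas="$replicas"
}
```

For example, run `list_machinesets` first, then `scale_machineset <machineset-name> 4`, and wait until `oc get nodes` shows the new workers as Ready before importing the bootstrap YAML.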

The workshop requires several services and configurations (the baseline) in OpenShift. You can prepare your environment easily by importing some YAML files:

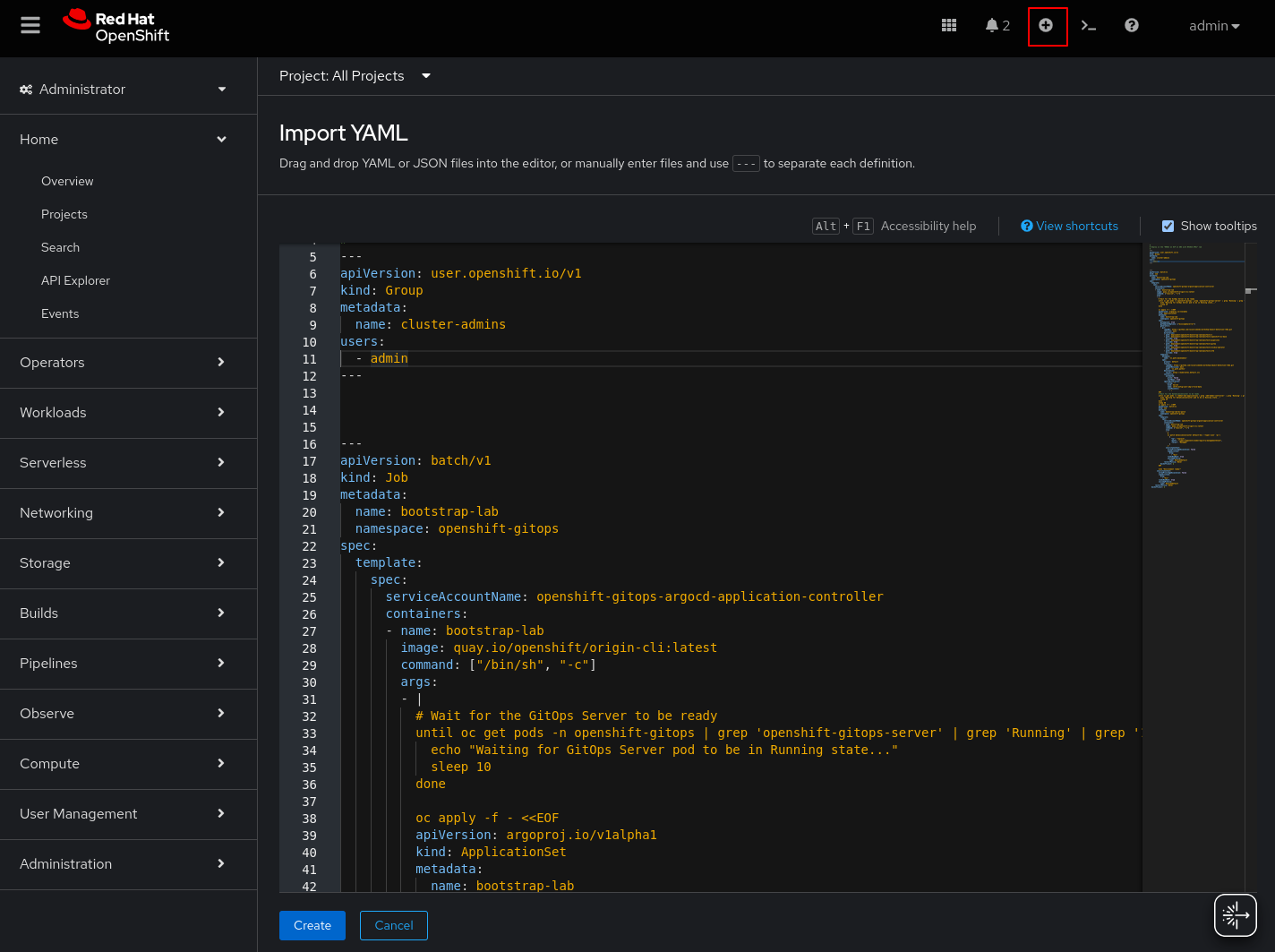

1- Navigate to the OpenShift Console as an admin user

2- Click on the + sign and import the bootstrap-lab YAML. Choose the right YAML file for the platform where you are deploying. On the Demo Platform, choose bootstrap-lab-rhpds-nvidia.yaml if your base is the "RHOAI on OCP on AWS with NVIDIA GPUs" lab, or bootstrap-lab-empty.yaml if your base is the "Red Hat OpenShift Container Platform Cluster (AWS)" lab.

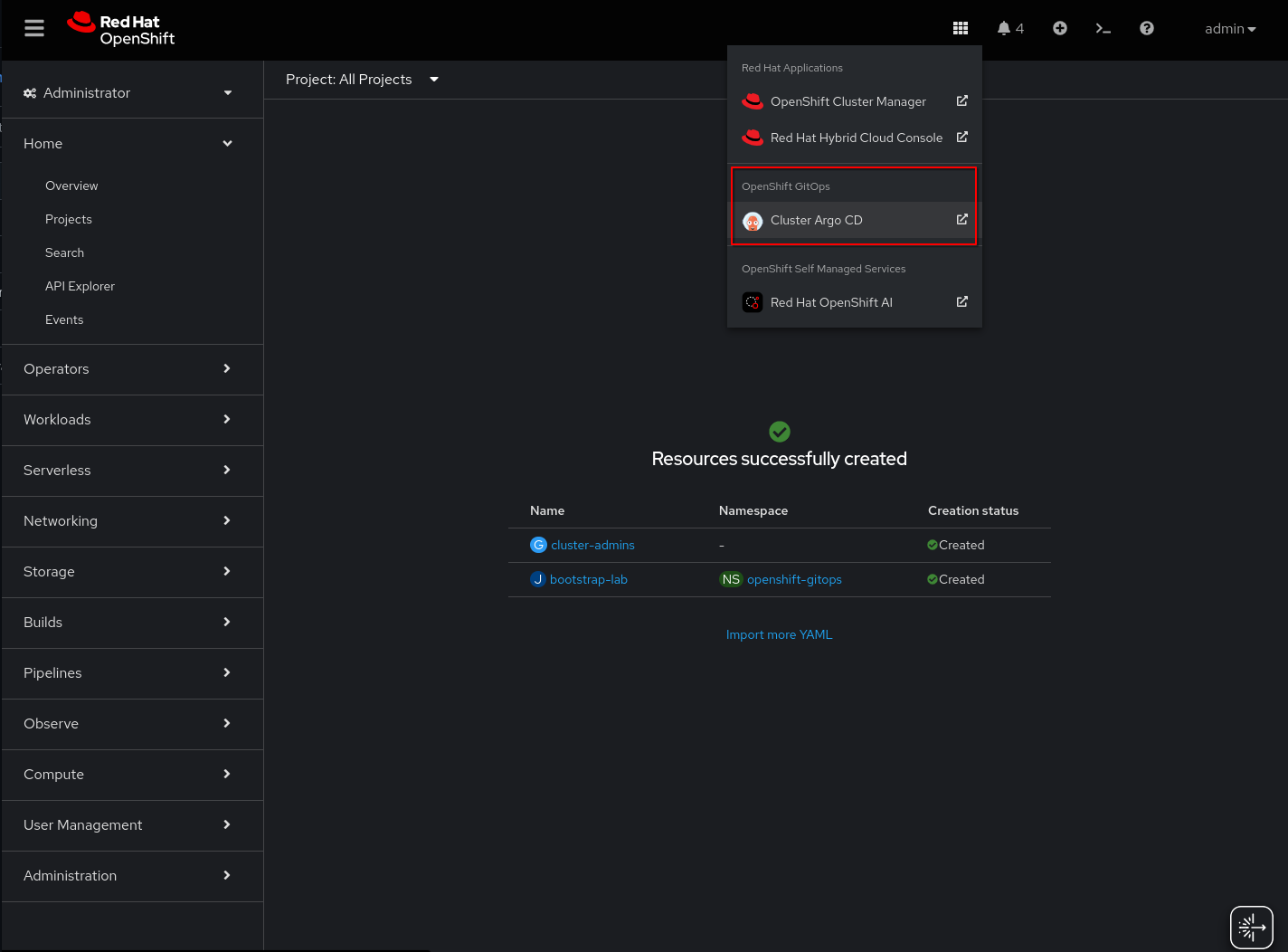

3- When Argo CD is accessible, log in using the admin credentials.

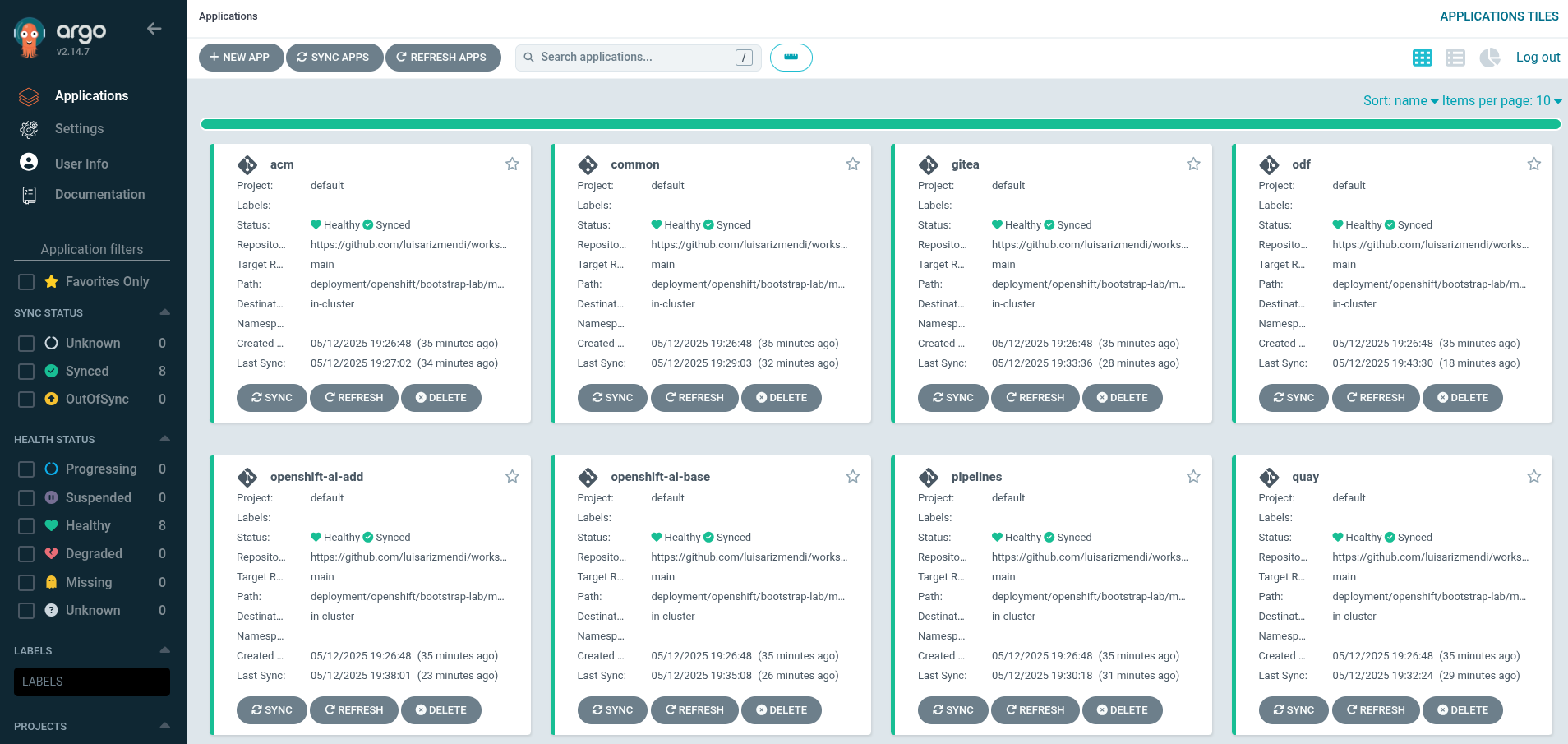

4- Wait until all Applications are in the Synced state. Converging can take a while (~30 min). If any application is still not green after that time, try re-syncing it.
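Steps 2 and 4 can also be driven from a terminal. The sketch below is not part of the workshop repository: the function names are hypothetical, it assumes `oc` is logged in as a cluster admin with the bootstrap file in the current directory, and it assumes the Argo CD Applications live in the `openshift-gitops` namespace (override `GITOPS_NS` if your setup differs).

```shell
#!/usr/bin/env bash
# Sketch only: CLI equivalent of importing the bootstrap YAML and checking sync status.
# Assumption: Argo CD Applications are created in openshift-gitops.
GITOPS_NS="${GITOPS_NS:-openshift-gitops}"

# Map the base lab ("nvidia" or "empty") to the bootstrap file named in step 2.
bootstrap_file() {
  case "$1" in
    nvidia) echo "bootstrap-lab-rhpds-nvidia.yaml" ;;
    empty)  echo "bootstrap-lab-empty.yaml" ;;
    *)      echo "unknown base lab: $1" >&2; return 1 ;;
  esac
}

# Apply the bootstrap file instead of pasting it into the console.
apply_bootstrap() {
  local file
  file="$(bootstrap_file "$1")" || return 1
  oc apply -f "$file"
}

# Print "name<TAB>sync status<TAB>health" for every Argo CD Application.
app_status() {
  oc get applications.argoproj.io -n "$GITOPS_NS" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.sync.status}{"\t"}{.status.health.status}{"\n"}{end}'
}

# Succeed only if at least one Application exists and all of them are Synced.
all_synced() {
  local status
  status="$(app_status)" || return 1
  [ -n "$status" ] || return 1
  ! printf '%s\n' "$status" | awk -F'\t' '$2 != "Synced"' | grep -q .
}
```

A possible usage is `apply_bootstrap empty` followed by `until all_synced; do app_status; sleep 60; done`, which keeps printing the status table until everything has converged.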

| If the deployment does not converge, first check "Installed Operators" for any operator in a failed state; if you find one, remove it and let GitOps retry the deployment automatically. |
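The same check can be approximated from the command line by listing ClusterServiceVersions whose phase is Failed. This is a sketch under the assumption that `oc` is logged in as a cluster admin; `failed_csvs` is a hypothetical helper name, and removing the operator is deliberately left as a manual step.

```shell
#!/usr/bin/env bash
# Sketch only: list operators (ClusterServiceVersions) that report the Failed phase.
# Output format: "namespace<TAB>csv-name", one per line.
failed_csvs() {
  oc get csv -A \
    -o jsonpath='{range .items[*]}{.metadata.namespace}{"\t"}{.metadata.name}{"\t"}{.status.phase}{"\n"}{end}' \
    | awk -F'\t' '$3 == "Failed" { print $1 "\t" $2 }'
}
```

An empty output from `failed_csvs` suggests the problem lies elsewhere, for example in a cluster that was not scaled before the deployment started.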

With these YAMLs you deploy:

- OpenShift Workshop users, groups, projects and guides
- OpenShift AI with Model Registry
- OpenShift Data Foundation
- Quay Container Image Registry
- OpenShift Pipelines
- Advanced Cluster Management with Red Hat Edge Manager
- Gitea as Source Code Repository
- NVIDIA GPU configuration (only if "GPU" bootstrap is used)

Edge Site

Gitea

Besides the Gitea instance deployed as the Source Code Repository in the Cloud, there is another one at the Edge, designed as the Edge Container Registry to minimize latency and bandwidth usage during the Workshop.

Remember that Gitea, like other container registries, can store multi-arch images, but it will still warn you if it stores images built for a different architecture.

To install this on a machine called A, make sure that you have passwordless sudo access to A and add machine A to the inventory file. Do the same for the shared NVIDIA device that will be used as the first Image Builder machine.
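Before running the playbook, it can help to confirm that Ansible reaches every host in the inventory and can escalate privileges without a password prompt. A minimal sketch, assuming the inventory file is named `inventory` in the current directory and that SSH access is already configured; `check_hosts` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch only: connectivity and privilege-escalation check for all inventory hosts.
# Assumption: the inventory file is ./inventory unless INVENTORY is set.
INVENTORY="${INVENTORY:-inventory}"

# Run the ping module with become enabled; a host that still asks for a
# sudo password, or is unreachable over SSH, is reported as failed.
check_hosts() {
  ansible all -i "$INVENTORY" -m ansible.builtin.ping --become
}
```

If `check_hosts` reports a failure for machine A or for the shared NVIDIA device, fix the access first, since the playbook needs both hosts.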

Then install all needed collections on your machine:

$ ansible-galaxy install -r requirements.yml

Then install and configure Gitea on A using the provided playbook. The playbook also requires access to the NVIDIA device designated as shared in the lab, which is used for image building.

$ ansible-playbook playbook.yml -i inventory

DNS - DHCP - Router OpenWrt

I’ve reused a device I had at home and installed FriendlyWRT on it.

You will find the backup configuration in the folder.